So far, we have covered process creation and termination in Linux. In this post and the next one, we will go through process scheduling. The task scheduler, process scheduler, or simply the scheduler is the part of the kernel that decides which process runs at any given time, with the goal of making the best use of the available resources. The Linux scheduler is preemptive, i.e. it can suspend an executing task partway through and run a different, better-suited task instead. The preempted task is eventually scheduled again and continues from the point where it stopped.

A scheduling policy is the strategy a multitasking OS follows to maximize efficiency. In Linux, as in most operating systems, processes are divided into two kinds: IO-bound and processor-bound. An IO-bound process is one that depends on frequent interrupts from IO devices, like a word processor that depends on keyboard input. A processor-bound task is one whose instruction sequence executes continuously on the processor until it is either preempted or finished. Linux tends to favor IO-bound processes because of their user-interactive nature, without compromising much on the background tasks that need processor time. The Linux scheduler is also a dynamic priority-based scheduler: every process starts with a base priority, which is then adjusted inversely to how often the process uses up its allocated timeslice without being interrupted by an IO device.
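The dynamic-priority idea above can be illustrated with a toy model. This is only a sketch under assumptions: the names (toy_task, toy_update_prio), the priority range and the step size are hypothetical and are not taken from the kernel.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical bounds and step; these are not kernel constants. */
enum { TOY_PRIO_BEST = 0, TOY_PRIO_WORST = 39, TOY_PRIO_STEP = 1 };

struct toy_task {
    int base_prio; /* priority the task started with */
    int dyn_prio;  /* adjusted priority; a lower value is more favoured */
};

static int toy_clamp_prio(int p)
{
    if (p < TOY_PRIO_BEST)
        return TOY_PRIO_BEST;
    if (p > TOY_PRIO_WORST)
        return TOY_PRIO_WORST;
    return p;
}

/*
 * Called at the end of a timeslice. used_full_slice is non-zero when the
 * task ran until its slice expired (processor-bound behaviour) and zero
 * when it blocked on IO before the slice ran out.
 */
static void toy_update_prio(struct toy_task *t, int used_full_slice)
{
    assert(t != NULL);
    if (used_full_slice)
        t->dyn_prio = toy_clamp_prio(t->dyn_prio + TOY_PRIO_STEP); /* penalise */
    else
        t->dyn_prio = toy_clamp_prio(t->dyn_prio - TOY_PRIO_STEP); /* reward */
}
```

A task that keeps exhausting its slice drifts towards TOY_PRIO_WORST, while one that keeps blocking on IO drifts towards TOY_PRIO_BEST, which is the inverse relationship described above.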

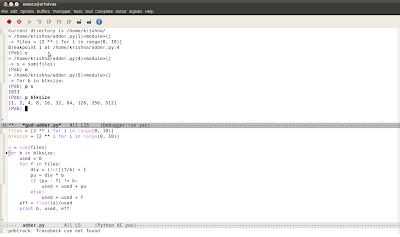

The Linux scheduler is defined in kernel/sched.c. The scheduling algorithm is called the Completely Fair Scheduler (CFS), developed by the Hungarian hacker Ingo Molnar. CFS is an O(log n) algorithm built on a red-black tree that implements a timeline of future tasks ready for execution. Before CFS, Linux used the O(1) scheduler. The bitmap-based priority queue data structure used by the priority-based scheduling class is given by:

    struct rt_prio_array {
        DECLARE_BITMAP(bitmap, MAX_RT_PRIO+1); /* include 1 bit for delimiter */
        struct list_head queue[MAX_RT_PRIO];
    };
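To see why a bitmap plus one list per priority gives a fast "pick the best task" operation, here is a small user-space sketch of the same idea. All names (toy_prio_array, toy_enqueue, toy_pick_next, TOY_NPRIO) are hypothetical, a singly linked FIFO stands in for the kernel's list_head, and a lower number is assumed to mean a higher priority.

```c
#include <assert.h>
#include <limits.h>
#include <stddef.h>

#define TOY_NPRIO 100 /* hypothetical number of priority levels */
#define BITS_PER_WORD (sizeof(unsigned long) * CHAR_BIT)
#define NWORDS ((TOY_NPRIO + BITS_PER_WORD - 1) / BITS_PER_WORD)

struct toy_task {
    int id;
    struct toy_task *next;
};

struct toy_prio_array {
    unsigned long bitmap[NWORDS];     /* bit p is set iff queue p is non-empty */
    struct toy_task *head[TOY_NPRIO]; /* one FIFO per priority level */
    struct toy_task *tail[TOY_NPRIO];
};

/* Append a task to the FIFO of its priority and mark that level as busy. */
static void toy_enqueue(struct toy_prio_array *a, struct toy_task *t, int prio)
{
    assert(prio >= 0 && prio < TOY_NPRIO);
    t->next = NULL;
    if (a->tail[prio])
        a->tail[prio]->next = t;
    else
        a->head[prio] = t;
    a->tail[prio] = t;
    a->bitmap[prio / BITS_PER_WORD] |= 1UL << (prio % BITS_PER_WORD);
}

/* Remove and return the first task of the lowest-numbered busy level. */
static struct toy_task *toy_pick_next(struct toy_prio_array *a)
{
    for (size_t w = 0; w < NWORDS; w++) {
        size_t bit = 0, prio;
        struct toy_task *t;

        if (a->bitmap[w] == 0)
            continue; /* no runnable task in this word */
        while (!(a->bitmap[w] & (1UL << bit)))
            bit++; /* find the first set bit */
        prio = w * BITS_PER_WORD + bit;
        t = a->head[prio];
        a->head[prio] = t->next;
        if (a->head[prio] == NULL) { /* level drained: clear its bit */
            a->tail[prio] = NULL;
            a->bitmap[w] &= ~(1UL << bit);
        }
        return t;
    }
    return NULL; /* nothing is runnable */
}
```

The work done by toy_pick_next is bounded by the number of priority levels, not by the number of queued tasks, which is the property the bitmap is there to provide.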

Here MAX_RT_PRIO is the number of real-time priority levels. For user space, the number of real-time priority levels is given by MAX_USER_RT_PRIO = 100, and MAX_RT_PRIO is defined to be the same value. The total number of priority levels is MAX_PRIO = MAX_RT_PRIO + 40 = 140. The main scheduling data structure is the run-queue, defined as struct rq. This structure actually belongs to the previous O(1) scheduling algorithm, except for a few changes. One important change is the introduction of a reference to struct cfs_rq and of the list of leaf cfs_rq structures, given by struct list_head leaf_cfs_rq_list.

The struct cfs_rq consists of:

    struct load_weight load;
    unsigned long nr_running;
    struct rb_root tasks_timeline;
    struct rb_node *rb_leftmost;

struct load_weight is a simple structure with two unsigned longs used in load balancing.

The nr_running variable gives the number of runnable processes on the queue.

tasks_timeline is the root of the red-black tree mentioned above. The leftmost node of the red-black tree is also tracked, in rb_leftmost. It points to the most eligible task to run next (see the enqueue method declared in sched_class).
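The benefit of tracking the leftmost node can be shown with a simplified sketch. To keep it short, a plain unbalanced binary search tree stands in for the kernel's red-black tree, so it demonstrates only the cached-leftmost bookkeeping and not the rebalancing. The names (toy_node, toy_timeline, toy_insert, toy_pop_leftmost) are hypothetical; the key plays the role of the value CFS sorts tasks by, which is the task's virtual runtime.

```c
#include <assert.h>
#include <stddef.h>

struct toy_node {
    unsigned long long key; /* sort key; the smallest key runs first */
    struct toy_node *left, *right;
};

struct toy_timeline {
    struct toy_node *root;
    struct toy_node *leftmost; /* cached node with the smallest key */
};

/* Insert n and refresh the cache if n ended up as the leftmost node. */
static void toy_insert(struct toy_timeline *tl, struct toy_node *n)
{
    struct toy_node **link = &tl->root;
    int is_leftmost = 1;

    assert(n != NULL);
    n->left = n->right = NULL;
    while (*link) {
        if (n->key < (*link)->key) {
            link = &(*link)->left;
        } else {
            link = &(*link)->right;
            is_leftmost = 0; /* went right at least once */
        }
    }
    *link = n;
    if (is_leftmost)
        tl->leftmost = n;
}

/* Unlink and return the node with the smallest key, or NULL if empty. */
static struct toy_node *toy_pop_leftmost(struct toy_timeline *tl)
{
    struct toy_node **link = &tl->root;
    struct toy_node *n;

    if (*link == NULL)
        return NULL;
    while ((*link)->left)
        link = &(*link)->left;
    n = *link;
    *link = n->right; /* the leftmost node has no left child */

    /* Recompute the cache by walking left from the root. */
    tl->leftmost = tl->root;
    while (tl->leftmost && tl->leftmost->left)
        tl->leftmost = tl->leftmost->left;
    return n;
}
```

With the cache in place, reading the next task to run is a single pointer dereference of tl->leftmost; only insertion and removal need to walk the tree.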

CFS also eliminates the need for the priority queue by introducing two new structures into struct task_struct: struct sched_entity and struct sched_class. The important parts of struct sched_entity are:

    struct sched_entity {
        struct load_weight load;
        struct rb_node run_node;
        struct list_head group_node;
        ...
    };
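Because the tree node is embedded inside the entity rather than pointing to it, code that walks the tree has to get from a node back to its enclosing structure. The kernel does this with its container_of macro; the sketch below reproduces the pattern in user space. The toy_ types and helpers are hypothetical stand-ins, not the kernel definitions.

```c
#include <assert.h>
#include <stddef.h>

/* Stand-ins for rb_node, sched_entity and task_struct. */
struct toy_rb_node {
    struct toy_rb_node *left, *right;
};

struct toy_sched_entity {
    unsigned long weight;
    struct toy_rb_node run_node; /* embedded tree node */
};

struct toy_task {
    int pid;
    struct toy_sched_entity se; /* embedded scheduling entity */
};

/* Step back from a member's address to the start of the enclosing struct. */
#define toy_container_of(ptr, type, member) \
    ((type *)((char *)(ptr) - offsetof(type, member)))

static struct toy_sched_entity *toy_entity_of(struct toy_rb_node *node)
{
    assert(node != NULL);
    return toy_container_of(node, struct toy_sched_entity, run_node);
}

static struct toy_task *toy_task_of(struct toy_sched_entity *se)
{
    assert(se != NULL);
    return toy_container_of(se, struct toy_task, se);
}
```

Given only a pointer to a run_node taken from the tree, toy_task_of(toy_entity_of(node)) recovers the owning task without storing any back-pointer in the node.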

Here run_node is the entity's node in the red-black tree. The struct sched_class contains pointers to the functions required to implement the CFS scheduler. It includes the enqueue and dequeue functions, which add a struct task_struct to the rq and remove it again as the task's runnable status changes; the check_preempt_curr function, which ensures fairness (the F of CFS) by checking whether the current task should be preempted; the set_curr_task function, which sets the current task as running on the given CPU; and the prio_changed function, which reports a change in priority under dynamic priority-driven scheduling. The average time for scheduling a task is given by:

    return (u64)sysctl_sched_time_avg * NSEC_PER_MSEC / 2;

Here NSEC_PER_MSEC stands for nanoseconds per millisecond. One observation is that the CFS scheduling algorithm in Linux tries to push timing accuracy down to the scale of nanoseconds.
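As a worked example of the expression above, the following user-space sketch repeats the arithmetic with a plain parameter in place of sysctl_sched_time_avg. The function and macro names are hypothetical, and the input of 1000 ms is an assumed value chosen for illustration.

```c
#include <assert.h>
#include <stdint.h>

#define TOY_NSEC_PER_MSEC 1000000ULL /* nanoseconds in one millisecond */

/* time_avg_ms plays the role of sysctl_sched_time_avg (in milliseconds). */
static uint64_t toy_avg_period_ns(unsigned int time_avg_ms)
{
    return (uint64_t)time_avg_ms * TOY_NSEC_PER_MSEC / 2;
}

static void toy_avg_period_check(void)
{
    /* 1000 ms * 1,000,000 ns/ms / 2 = 500,000,000 ns */
    assert(toy_avg_period_ns(1000) == 500000000ULL);
    /* The product 5000 * 1,000,000 would not fit in 32 bits; the cast avoids that. */
    assert(toy_avg_period_ns(5000) == 2500000000ULL);
}
```

The cast to a 64-bit type before the multiplication matters: the millisecond value is converted to nanoseconds first, and that intermediate product can exceed the 32-bit range.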

Since Linux allows preemptive scheduling, CPU time is divided into timeslices, and a context switch can take place at the end of each one. In CFS the timeslice length is variable rather than fixed. When to schedule a new task is determined in the sched_clock_tick() function in kernel/sched_clock.c, by a resched_task() call triggered when the user requests a change of priority (using the nice() system call, among others), or by the sched_yield() system call.
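From user space, the two system calls mentioned above are available through the standard POSIX wrappers nice() and sched_yield(). The sketch below wraps them in two small helpers; the helper names are hypothetical, and only non-negative increments are used because raising priority normally requires privileges.

```c
#include <assert.h>
#include <errno.h>
#include <sched.h>
#include <unistd.h>

/*
 * Lower this process's priority by inc nice levels.
 * Returns 0 on success and -1 on error.
 */
static int toy_be_nicer(int inc)
{
    int ret;

    assert(inc >= 0);
    errno = 0; /* -1 can be a legitimate nice value, so check errno too */
    ret = nice(inc);
    if (ret == -1 && errno != 0)
        return -1;
    return 0;
}

/* Voluntarily give up the CPU; returns 0 on success. */
static int toy_yield(void)
{
    return sched_yield();
}
```

Calling toy_be_nicer(1) asks the kernel to treat the process as slightly less urgent, and toy_yield() hands the processor to another runnable task if there is one. Both are requests to the scheduler, which still decides what actually runs next.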

In the next post, we will see how spinlocks and rcutree are used in scheduling. We will also look at the different scheduling policies in Linux.